Azure Site-to-Site VPN and home network integration

Introduction

Recently, I’ve had to work pretty extensively with various cloud providers.

Many companies I’ve worked with fully integrate their cloud provider accounts into their existing on-premise network. For this blog post, I’m going to call this a cloud “enclave”.

The hosts and services in the cloud enclave are, for all intents and purposes, equal to resources in the “on-premise” data center. VMs in the cloud get IP addresses that are routable on the existing, “on-premise” networks and DNS is configured such that cloud and “on-premise” names roll up under the same domain and are universally resolvable. Most cloud workloads are only accessible via the private network.

There are significant advantages to this “enclave” concept.

- Since services in the cloud are not publicly accessible, the security “threat level” is similar to that of private, on-premise services.

- There are not two classes of network/DNS services. Everything is basically on one big happy network.

- (Arguably) things are more portable, since there is no reliance on a cloud-provider-specific way to expose services.

Creating an “enclave” and bridging it to one’s “on-premise” network is a significantly more complicated activity than most tutorials describe. For a home lab, this may seem like overkill, but I consider this a useful exercise as it more closely resembles the setup I’ve seen in many companies.

This blog post describes configuring a cloud enclave with Azure. I had four major goals for it.

My cloud enclave must:

- be routable from my existing home network.

- integrate with my existing DNS solution.

- be automated so it can be created and destroyed on demand.

- be minimally disruptive to my existing setup.

Existing Home Lab

First, let’s consider my “On-Premise” home network. I run many services that would be considered essential in a typical corporate network:

- DNS

- LDAP

- Kerberos

- Certificate Management

These services are provided by Red Hat Identity Management (IdM), which is a version of FreeIPA bundled with RHEL.

I also have DHCP managed by Red Hat Satellite.

My IP address topology is relatively simple. I have a flat /24 as my main network, although I’ve dabbled with splitting out separate subnets and VLANs for things like baseboard management controllers and storage.

For my enclave, I only need to extend DNS and the routable network into the cloud. DHCP will be handled separately by the cloud, and the other services, e.g. LDAP and Kerberos, will become accessible by virtue of extending DNS and the routable network.

Designing my IP Space

I have used 172.31.0.0/24 as my home network IP range for many years.

When provisioning private cloud networks, I could choose any IP range in the RFC1918 space, so long as it doesn’t overlap with my “on-premise” network.

I decided to extend my routable network to 172.31.0.0/16, which can be divided into eight /19 networks, each containing 8192 IP addresses.

| Subnet | Assignment |
|---|---|
| 172.31.0.0/19 | On-premise Networks |
| 172.31.32.0/19 | Unassigned |
| 172.31.64.0/19 | Unassigned |
| 172.31.96.0/19 | Unassigned |
| 172.31.128.0/19 | Unassigned |
| 172.31.160.0/19 | Unassigned |
| 172.31.192.0/19 | AWS |
| 172.31.224.0/19 | Azure |

With this layout, my “on-premise” network can remain the same as it’s always been, since 172.31.0.0/24 is a subnet of 172.31.0.0/19. Additionally, I’ll have the potential to use other subnets of 172.31.0.0/19 in case I ever choose to deviate from a flat network.

With a /19, it’s highly unlikely that I’ll use all of the IP addresses in a given allocation. In my situation, this is ideal, since I will never have to worry about running out of IP addresses. In an “Enterprise” situation, I would be more granular in specifying things (IPv6 can’t come soon enough!).
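The allocation arithmetic above can be double-checked with Python’s standard `ipaddress` module. This is a small sanity-check sketch, not part of the automation repo:

```python
import ipaddress


def plan_allocations(supernet, prefix):
    """Split `supernet` into equally sized allocations of the given prefix length."""
    return list(ipaddress.ip_network(supernet).subnets(new_prefix=prefix))


allocations = plan_allocations("172.31.0.0/16", 19)
home = ipaddress.ip_network("172.31.0.0/24")     # existing flat home network
azure = ipaddress.ip_network("172.31.224.0/19")  # allocation reserved for Azure

for net in allocations:
    print(net, net.num_addresses)

print(home.subnet_of(allocations[0]))  # does the home network fit in the on-premise /19?
print(home.overlaps(azure))            # does it collide with the Azure allocation?
```

Splitting a /16 into /19s yields 2^3 = 8 networks, and each /19 holds 2^13 = 8192 addresses.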

Designing DNS

Until now, I’ve had one flat DNS zone for all hostnames in my network: private.opequon.net.

I could continue with one flat zone, but through experimentation I found this to be more trouble than it is worth, because every cloud host would have to register its hostname with my FreeIPA/IdM server.

Instead, it seems worthwhile to leverage each cloud provider’s internal DNS and delegate a zone to each cloud provider. This way a VM started in Azure can automatically register its hostname, without any ongoing effort on my part.

I can then bridge together the zones of my existing “on-premise” network and each cloud zone using DNS forwarders. My existing “on-premise” zone can remain untouched.

What I’ve come up with for my network is:

| Zone | Purpose |
|---|---|
| private.opequon.net | “On premise” Hosts |
| aws.private.opequon.net | AWS Hosts |
| azure.private.opequon.net | Azure Hosts |

For Azure, there is a problem with this set up and reverse DNS, but I’ll address that in the “Problems and Improvements” section.

Critical Components

With my DNS and IP ranges set, we can move on to the critical components of this “enclave”. While there are many different “bits” that need to be configured, the two critical components that make this work are:

- Site-to-Site VPN

- DNS Forwarding

Site-to-Site VPN w/ Libreswan

Azure offers a Site-to-Site VPN object that uses IPSec tunnels to create a VPN.

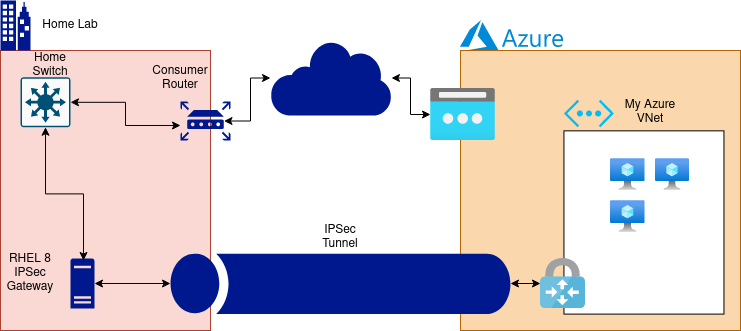

For the “on-premise” side of the tunnel, I use the Libreswan implementation that’s bundled with RHEL.

Visually, my configuration looks like this:

Azure does not directly support Libreswan, but it does offer an Openswan option. Libreswan is a fork of Openswan, and although their configuration formats have diverged somewhat, the Openswan option is a good starting point.

For reference on configuring my RHEL gateway host, “Using LibreSwan with Azure VPN Gateway” was the only useful blog post I’ve been able to find.
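For orientation, the on-premise half of the tunnel comes down to a Libreswan `conn` stanza plus a matching pre-shared key entry. The sketch below renders a minimal version of both from values used elsewhere in this post. It is an illustration, not the template from the repo, and it makes several assumptions. The Libreswan host is assumed to sit behind NAT, so `leftid` is set to the public IP that Azure’s local network gateway knows. The Azure gateway address `203.0.113.10` is a hypothetical placeholder. IKE/ESP proposal settings (`ike=`, `esp=`) are deliberately left out, because the right values depend on your Libreswan version and Azure gateway configuration.

```python
def libreswan_conn(name, on_prem_public_ip, on_prem_subnet, azure_gateway_ip, azure_subnet):
    """Render a minimal Libreswan 'conn' stanza for a PSK site-to-site tunnel."""
    lines = [
        f"conn {name}",
        "    authby=secret",                 # authenticate with a pre-shared key
        "    auto=start",                    # bring the tunnel up when ipsec starts
        "    left=%defaultroute",            # local endpoint: this host's default-route IP
        f"    leftid={on_prem_public_ip}",   # assumption: host is behind NAT
        f"    leftsubnet={on_prem_subnet}",  # on-premise range (172.31.0.0/19)
        f"    right={azure_gateway_ip}",     # Azure VPN gateway public IP
        f"    rightsubnet={azure_subnet}",   # Azure VNet range (172.31.224.0/19)
    ]
    return "\n".join(lines) + "\n"


def libreswan_secret(on_prem_public_ip, azure_gateway_ip, psk):
    """Render the matching secrets entry: '<local id> <remote> : PSK "<key>"'."""
    return f'{on_prem_public_ip} {azure_gateway_ip} : PSK "{psk}"\n'


# 203.0.113.10 is a hypothetical placeholder for the Azure gateway's public IP.
conn = libreswan_conn("azure", "108.56.139.185", "172.31.0.0/19",
                      "203.0.113.10", "172.31.224.0/19")
secret = libreswan_secret("108.56.139.185", "203.0.113.10", "mysecretkey")
print(conn)
print(secret)
```

On RHEL, Libreswan’s default configuration also reads files from /etc/ipsec.d/. That makes it convenient to drop the stanza into a `.conf` file there and the key into a `.secrets` file.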

DNS Forwarding

At time of writing, Azure does not have a first-class DNS forwarder service. Therefore, I have to build this component. Fortunately, it is straightforward to build a DNS forwarder using a tiny VM and DNSMasq.

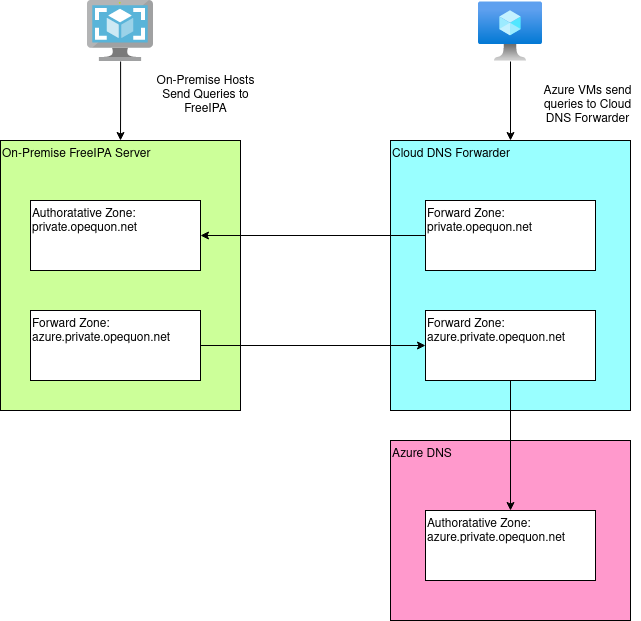

The solution looks like this:

In Azure, I create a private zone (azure.private.opequon.net) and link it to my VNET. With that, hosts inside the VNET can query the Azure DNS servers and resolve hostnames in my private zone.

However, hosts outside of the VNET, like my on-premise network, cannot access Azure DNS!

Having the DNS Forwarder VM allows me to, essentially, proxy DNS queries between my on-premise FreeIPA and Azure DNS.

So that my Azure VMs can also resolve on-premise names, the DNS Forwarder VM sends queries for certain zones back to FreeIPA. To ensure all Azure VMs use this by default, the VNET’s primary DNS server is changed from the default Azure DNS to my DNS Forwarder.
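The forwarding rules can be expressed as a handful of dnsmasq `server=` lines. The sketch below generates them from the variables in terraform.tfvars (`on_premise_dns_zones`, `on_premise_dns_server`, `private_dns_zone_name`, `vnet_dns_reverse_zones`, `azure_private_dns_server`). The actual template in the repo may differ.

One detail matters here. azure.private.opequon.net is a child of private.opequon.net, so it needs its own, more specific `server=` line. dnsmasq gives more specific domains precedence. Without that line, Azure names would be sent to FreeIPA, which would forward them straight back to this VM.

```python
def dnsmasq_conf(on_prem_zones, on_prem_dns, azure_zones, azure_dns):
    """Build dnsmasq forwarding rules for the DNS Forwarder VM."""
    lines = ["no-resolv"]  # use only the upstreams listed here, not /etc/resolv.conf
    # On-premise zones (forward and reverse) go back to FreeIPA/IdM.
    for zone in on_prem_zones:
        lines.append(f"server=/{zone}/{on_prem_dns}")
    # Azure zones go to Azure DNS. azure.private.opequon.net is more specific
    # than private.opequon.net, so dnsmasq prefers this rule for Azure names.
    for zone in azure_zones:
        lines.append(f"server=/{zone}/{azure_dns}")
    # Anything else (e.g. public names) also goes to Azure DNS.
    lines.append(f"server={azure_dns}")
    return "\n".join(lines) + "\n"


conf = dnsmasq_conf(
    on_prem_zones=["private.opequon.net", "0.31.172.in-addr.arpa"],
    on_prem_dns="172.31.0.101",
    azure_zones=["azure.private.opequon.net", "225.31.172.in-addr.arpa"],
    azure_dns="168.63.129.16",
)
print(conf, end="")
```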

Let’s set everything up!

Manual Steps

Most things are covered by the automation, but I had to configure a few items manually due to a lack of available automation modules and/or APIs.

Both of these items are one-time setup, and neither incurs a cloud cost.

Router Setup

My Libreswan endpoint is within my network and thus is behind my router. Therefore, I need to configure my router to forward certain ports to my Libreswan host.

| Port | Protocol | Destination |
|---|---|---|
| 4500 | UDP | Libreswan Host |
| 4500 | TCP | Libreswan Host |
| 500 | UDP | Libreswan Host |

If your Libreswan endpoint is at the edge of your network (i.e. it holds a public IP address), then this step is not necessary.

FreeIPA Forward Zones

At the time of writing, I have not found an Ansible module that configures Forward Zones in FreeIPA/IdM, though I may just have missed it.

For these examples to make sense, here is my relevant configuration:

- DNS Forwarder VM IP address: 172.31.225.4

- FreeIPA/IdM Server Hostname: ipa.private.opequon.net

- Azure subdomain: azure.private.opequon.net

First, I create a DNS Forward Zone in FreeIPA with a forward policy of ‘only’. This ensures that queries for azure.private.opequon.net are forwarded to the DNS Forwarder VM.

```
[root@ipa ~]# ipa dnsforwardzone-add --forward-policy=only --forwarder=172.31.225.4 azure.private.opequon.net.
Server will check DNS forwarder(s).
This may take some time, please wait ...
ipa: WARNING: DNS server 172.31.225.4: query 'azure.private.opequon.net. SOA': The DNS operation timed out after 10.0006103515625 seconds.
Zone name: azure.private.opequon.net.
Active zone: TRUE
Zone forwarders: 172.31.225.4
Forward policy: only
```

The “DNS operation timed out” warning will occur if you perform this step BEFORE provisioning the rest of the enclave with the automation. It is safe to ignore.

Next, to make the forward zone effective, I need a “glue record” to include it in my primary zone. This is just a simple NS record that points back to my IPA server.

```
[root@ipa ~]# ipa dnsrecord-add private.opequon.net azure --ns-rec=ipa.private.opequon.net.
Record name: azure
NS record: ipa.private.opequon.net.
```

Once the rest of the enclave is configured, this IdM configuration should immediately (or pretty close to immediately) work.
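One way to see why this combination works is to model it as a most-specific-zone lookup. The resolver finds the longest zone suffix that contains the query name and applies whatever is configured for that zone. The sketch below is a deliberately simplified model of that rule, not how BIND or FreeIPA/IdM are implemented internally. The rule labels are placeholders.

```python
def pick_upstream(name, zone_rules, default):
    """Return the rule for the most specific zone containing `name`."""
    labels = name.rstrip(".").lower().split(".")
    # Try the full name, then strip one leading label at a time, so the
    # longest matching zone suffix wins.
    for i in range(len(labels)):
        zone = ".".join(labels[i:])
        if zone in zone_rules:
            return zone_rules[zone]
    return default


# How FreeIPA/IdM treats names once the forward zone and NS record exist.
idm_rules = {
    "private.opequon.net": "answer locally",
    "azure.private.opequon.net": "forward to 172.31.225.4",
}

for query in ("ipa.private.opequon.net", "vm1.azure.private.opequon.net", "example.com"):
    print(query, "->", pick_upstream(query, idm_rules, "normal recursion"))
```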

Automation Code

All the code described here is on GitHub.

The automation I have for this is written in a combination of Terraform and Ansible.

Two main scripts tie the pieces together:

- build.sh - Creates all cloud infrastructure in Azure and sets up Libreswan and the DNS Forwarder.

- destroy.sh - Tears everything down.

The build.sh and destroy.sh scripts assume that the Site-to-Site VPN tunnel is being configured on the same host that is running the script. Some modification of the Ansible will be required if you want to run it from a different host.

Sometimes the Azure APIs are very slow and cause the Terraform code to time out! Luckily, all this code is idempotent, so if that happens, it can just be run again.
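Because the scripts are idempotent, a timeout can be handled by rerunning them. If you want to automate that, a small wrapper like the following would work. It is a hypothetical helper, not something in the repo, and the attempt count and delay are arbitrary defaults.

```python
import subprocess
import time


def run_with_retries(cmd, attempts=3, delay_seconds=30, runner=subprocess.run):
    """Re-run an idempotent command until it exits 0 or attempts run out.

    Returns the attempt number that succeeded; raises RuntimeError otherwise.
    `runner` is injectable so the retry logic can be tested without Azure.
    """
    for attempt in range(1, attempts + 1):
        result = runner(cmd)
        if result.returncode == 0:
            return attempt
        if attempt < attempts:
            time.sleep(delay_seconds)  # give the Azure APIs a moment before retrying
    raise RuntimeError(f"{cmd!r} failed after {attempts} attempts")
```

In practice it would be called as `run_with_retries(["./build.sh"])` from the repository directory.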

Terraform Files

I’ve attempted to format and organize the Terraform files in a way that is easy to understand, rather than strictly following convention.

The main objects are in the following files:

- main.tf - Objects for the site-to-site VPN

- subnets.tf - Subnets and Network Security Groups

- dns.tf - DNS Private Zone

- dns_forwarder.tf - DNS Forwarder VM

Then there are the more conventional Terraform files, which contain what you’d expect:

- outputs.tf

- providers.tf

- variables.tf

- versions.tf

main.tf

main.tf contains the Site-to-Site VPN resources and the bare minimum prerequisites.

azurerm_resource_group defines the resource group. Every Azure resource created by this automation lives in this resource group.

azurerm_virtual_network defines our “Azure Virtual Network”, aka VNet, to which we will add subnets. The VNet has variables for the overarching address space as well as DNS servers for everything. In this automation, I’m overriding the default Azure DNS with the statically assigned IP address of our DNS Forwarder.

azurerm_subnet creates the subnet required for the Site-to-Site VPN. This is here, instead of in subnets.tf, because it’s required for the VPN connection. Also, it must be named GatewaySubnet, otherwise dependent resources throw an error.

azurerm_local_network_gateway provides details for our “Local” network, meaning the “On-Premise” side of the Site-to-Site VPN tunnel.

azurerm_public_ip provides a public IP address for the Azure side of the Site-to-Site VPN tunnel.

azurerm_virtual_network_gateway provides the Azure side of the Site-to-Site VPN tunnel. It links together the Public IP address and the Azure GatewaySubnet.

azurerm_virtual_network_gateway_connection creates the VPN. It links together the azurerm_virtual_network_gateway, the azurerm_local_network_gateway, and the VPN Shared Key, and creates the VPN configuration on the Azure side. Once this resource has finished provisioning, it is possible to start Libreswan on the on-premise side and activate the connection.

subnets.tf

subnets.tf contains all azurerm_subnet definitions, except for the GatewaySubnet.

In the public repo, I have just one subnet for my “main” network. In my personal lab, I add separate subnets for OpenShift and other things I run in the cloud.

dns.tf

dns.tf does some minimal DNS work.

azurerm_private_dns_zone actually creates our private DNS zone in Azure.

azurerm_private_dns_zone_virtual_network_link then links that DNS zone to our VNet. I have the registration_enabled flag set to true, so all Virtual Machines connected to the VNet will automatically have their hostnames registered in our private zone.

dns_forwarder.tf

dns_forwarder.tf defines the Virtual Machine that will be our DNS forwarder.

azurerm_network_interface defines its network interface and, importantly, its static IP within the VNet.

azurerm_linux_virtual_machine is the virtual machine definition. It currently uses a RHEL 8 image, but this could be changed to almost any RHEL-like distribution and still work.

azurerm_network_security_group is a simple network security group for the instance.

Ansible Playbooks

There are three Ansible playbooks. The build.sh and destroy.sh scripts pass the outputs of the Terraform build into these, so they have no separate inventory or variable files.

- azure_dns_forwarder.yaml - Configures the DNS forwarder

- azure_ipsec.yaml - Configures the on-premise side of the VPN tunnel on localhost

- azure_ipsec_remove.yaml - Destroys the on-premise side of the VPN tunnel
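build.sh and destroy.sh pass the Terraform outputs to these playbooks, but the exact mechanism isn’t shown here. One way to picture the hand-off: flatten `terraform output -json` into plain variables and give them to `ansible-playbook` with `-e @vars.json`. `terraform output -json` wraps each output as an object with `sensitive`, `type`, and `value` keys. The Python below only illustrates that data shape, and the output names in it are hypothetical.

```python
import json


def terraform_outputs_to_vars(output_json):
    """Flatten `terraform output -json` into a plain {name: value} mapping."""
    outputs = json.loads(output_json)
    return {name: details["value"] for name, details in outputs.items()}


# Shape of `terraform output -json`; these output names are hypothetical.
sample = """
{
  "vpn_gateway_public_ip": {"sensitive": false, "type": "string", "value": "203.0.113.10"},
  "dns_forwarder_ip": {"sensitive": false, "type": "string", "value": "172.31.225.4"}
}
"""

extra_vars = terraform_outputs_to_vars(sample)
# Written to vars.json, this could be used as: ansible-playbook azure_ipsec.yaml -e @vars.json
print(json.dumps(extra_vars, indent=2))
```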

Sample terraform.tfvars

A sample terraform.tfvars is included.

```hcl
# What Azure Region to use for all resources
azure_region_name="eastus"

# Resource group for all resources
resource_group_name="ENCLAVE-EAST"

# Default name for most resources
default_resource_name="ENCLAVE-EAST"

# Address Space and DNS servers for VNET
vnet_address_space=["172.31.224.0/19"]
vnet_dns_servers=["172.31.225.4"]

# Subnet for the Azure side of the Site-to-Site VPN
gateway_subnet=["172.31.224.0/24"]

# Names for Local Network Gateway and resources related to
# the on-premise side of the Site-to-Site VPN
local_network_gateway_name="ONPREM"
on_premise_name="ONPREM"

# On-Premise network range and public IP.
on_premise_private_network_ranges=["172.31.0.0/19"]
on_premise_public_ip_address="108.56.139.185"

# DNS Zone for Azure Private DNS
private_dns_zone_name="azure.private.opequon.net"

# Static Private IP address of DNS Forwarder.
dns_forwarder_ip_address="172.31.225.4"

# Name for the Public IP address for the Azure side of the VPN
vpn_azure_public_ip_name="VPN-EAST"

# Name and range of main subnet in Azure
subnet_main_name = "EAST-MAIN"
subnet_main_cidr_ranges = ["172.31.225.0/24"]

# DNS information for DNS Forwarder VM
on_premise_dns_zones = ["private.opequon.net", "0.31.172.in-addr.arpa"]
on_premise_dns_server = "172.31.0.101"

# The Azure Private DNS server is a static IP address
# See: https://docs.microsoft.com/en-us/azure/virtual-network/virtual-networks-name-resolution-for-vms-and-role-instances#considerations
azure_private_dns_server = "168.63.129.16"

# Reverse DNS currently does not work for on-prem hosts resolving Azure PTR records.
vnet_dns_reverse_zones = ["225.31.172.in-addr.arpa"]

# Shared Key for VPN
# DO NOT CHECK THIS INTO GIT, it's only here to show what the variable name is!
vpn_shared_key="mysecretkey"
```
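Every range above has to line up: the subnets sit inside the VNet, the VNet stays clear of the on-premise range, and the forwarder sits inside the main subnet. A pre-flight check before `terraform apply` can therefore save a slow, failed Azure deployment. This is a sketch of my own, not part of the repo, and it assumes one CIDR per list variable, as in the sample:

```python
import ipaddress


def check_layout(vnet, gateway_subnet, main_subnet, on_prem, dns_forwarder_ip):
    """Return a list of problems with the address plan; an empty list means OK."""
    vnet_net = ipaddress.ip_network(vnet)
    gateway = ipaddress.ip_network(gateway_subnet)
    main = ipaddress.ip_network(main_subnet)
    on_prem_net = ipaddress.ip_network(on_prem)
    forwarder = ipaddress.ip_address(dns_forwarder_ip)

    problems = []
    # Both subnets must be carved out of the VNet address space.
    for label, net in (("gateway_subnet", gateway), ("subnet_main", main)):
        if not net.subnet_of(vnet_net):
            problems.append(f"{label} {net} is outside the VNet {vnet_net}")
    # Subnets inside one VNet may not overlap.
    if gateway.overlaps(main):
        problems.append("gateway_subnet overlaps subnet_main")
    # Overlapping ranges cannot be routed across the tunnel.
    if vnet_net.overlaps(on_prem_net):
        problems.append("VNet overlaps the on-premise range")
    # The forwarder's static IP must live in the main subnet.
    if forwarder not in main:
        problems.append(f"DNS forwarder {forwarder} is not in subnet_main {main}")
    return problems


# Values from the sample terraform.tfvars (first element of each list).
print(check_layout(
    vnet="172.31.224.0/19",
    gateway_subnet="172.31.224.0/24",
    main_subnet="172.31.225.0/24",
    on_prem="172.31.0.0/19",
    dns_forwarder_ip="172.31.225.4",
))
```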

Problems and Improvements

There are still some problems with this setup. I may solve them and update this post when I have the time.

Lack of security groups

This configuration is pretty wide open internally. I’m doing very little of the Azure subnet segregation that would be common in an Enterprise organization.

I still feel pretty safe with this configuration, because it’s only really accessible from my home network. If you use this as a pattern for something more serious, though, it’s worth considering additional network segmentation.

Reverse DNS

Try as I might, I cannot get Reverse DNS forwarding to Azure private DNS working with FreeIPA/IdM.

When I set up a forward zone in FreeIPA/IdM, it expects the destination DNS server to have an SOA record matching the forward zone. So, if my forward zone is 225.31.172.in-addr.arpa, then the DNS server I’m forwarding to is expected to have an SOA record for 225.31.172.in-addr.arpa.

Azure DNS appears to put all PTR records into one big in-addr.arpa zone, so there is no appropriate SOA record, and FreeIPA/IdM refuses to set up the forward zone.
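To make the mismatch concrete, the sketch below derives the per-/24 reverse zone, the one FreeIPA/IdM checks for an SOA record, from the network address. Each PTR record, by contrast, is named after the full reversed address. The helper only handles octet-aligned prefixes (/8, /16, /24), which covers the reverse zones used in this post.

```python
import ipaddress


def reverse_zone_for(cidr):
    """Return the in-addr.arpa zone name for an octet-aligned IPv4 network."""
    net = ipaddress.ip_network(cidr)
    if net.version != 4 or net.prefixlen not in (8, 16, 24):
        raise ValueError("only IPv4 /8, /16 and /24 networks are handled here")
    octets = str(net.network_address).split(".")[: net.prefixlen // 8]
    return ".".join(reversed(octets)) + ".in-addr.arpa"


zone = reverse_zone_for("172.31.225.0/24")
ptr_name = ipaddress.ip_address("172.31.225.4").reverse_pointer

print(zone)      # the zone FreeIPA/IdM expects an SOA record for
print(ptr_name)  # the PTR owner name for the DNS Forwarder VM
print(ptr_name.endswith("." + zone))
```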

Other DNS implementations, like dnsmasq, can cope with this. I suspect this is because they simply match the query name textually before forwarding it, but I’m not entirely sure. A bit more research is required.

At any rate, Reverse DNS does not automatically work with this solution, which is a problem.

Plain text VPN key

This example just has the shared VPN key as plain text. For a home lab, this is mostly acceptable, although still not ideal. For an enterprise solution, this VPN key should be vaulted and highly protected.

Routing weirdness

In this example, the IPSec tunnel is not at the edge of the network, but is instead inside my private network. My consumer router forwards certain ports to the IPSec host in order to make the connection work.

When I’m on the road (a rarity these days), I use the VPN client/server provided by my consumer router, and there are routing difficulties when trying to use resources in the enclave when I’m connected to my router’s VPN.